Uncertainty quantification for AI systems refers to the process of measuring and expressing the degree of confidence or uncertainty in a model’s predictions or inferences.

This is particularly valuable in critical, high-stakes applications such as medicine or autonomous driving. There, it matters not only what an AI predicts but also how reliable that prediction is. Making uncertainty visible can counter overreliance on model outputs (automation bias) and support more trustworthy decisions. This is why uncertainty quantification is counted among Explainable AI techniques: it enhances transparency, and it also supports model debugging and risk management by turning the vague idea that “a model could be wrong” into concrete numbers.

Example Methods

- Bayesian Methods: Place probability distributions over model parameters and propagate them to the predictions to estimate uncertainty

- Monte Carlo Simulation: Generate many model outputs from randomly sampled or perturbed inputs to approximate the output distribution (commonly used for deep learning and parametric models)

- Ensemble Methods: Aggregate predictions from multiple models trained with different initializations or data splits; their mean gives a consensus and their variance indicates uncertainty

- Monte Carlo Dropout: Keep dropout active at prediction time (a model-specific technique for networks trained with dropout) and run multiple forward passes to observe the spread of outputs
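The four methods above can be sketched side by side on a toy 1-D regression problem. This is a minimal NumPy illustration, not a reference implementation: the synthetic data, the cubic polynomial model, the prior and noise precisions (`alpha`, `beta`), the bootstrap resamples standing in for "different data splits" in the ensemble, and the tiny random-feature network used for MC dropout are all assumptions chosen for brevity. Every function returns a predictive mean and standard deviation per test point.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic 1-D regression data (illustration only); x = 5.0 lies outside the training range.
x_train = rng.uniform(-3, 3, size=40)
y_train = np.sin(x_train) + rng.normal(0, 0.2, size=40)
x_test = np.array([0.0, 2.5, 5.0])


def features(x, degree=3):
    # Polynomial features [1, x, x^2, x^3]
    return np.vander(x, degree + 1, increasing=True)


def bayesian_predict(x, y, x_new, alpha=1.0, beta=25.0):
    # Bayesian linear regression: Gaussian prior N(0, I/alpha) on the weights and
    # Gaussian noise with precision beta; the posterior is available in closed form.
    phi = features(x)
    cov = np.linalg.inv(alpha * np.eye(phi.shape[1]) + beta * phi.T @ phi)
    w_mean = beta * cov @ phi.T @ y
    phi_new = features(x_new)
    # Predictive variance = noise variance + parameter uncertainty phi^T cov phi
    var = 1.0 / beta + np.einsum("ij,jk,ik->i", phi_new, cov, phi_new)
    return phi_new @ w_mean, np.sqrt(var)


def mc_input_simulation(model, x_new, input_std=0.1, n_samples=1000):
    # Monte Carlo simulation: push randomly perturbed inputs through a fixed model.
    noisy = x_new + rng.normal(0, input_std, size=(n_samples, x_new.size))
    out = model(noisy)
    return out.mean(axis=0), out.std(axis=0)


def ensemble_predict(x, y, x_new, n_models=20, degree=3):
    # Ensemble: one model per bootstrap resample (a stand-in for different data splits).
    preds = []
    for _ in range(n_models):
        idx = rng.integers(0, x.size, size=x.size)
        preds.append(np.polyval(np.polyfit(x[idx], y[idx], degree), x_new))
    preds = np.stack(preds)
    return preds.mean(axis=0), preds.std(axis=0)


def train_dropout_net(x, y, hidden=50, p=0.2, steps=2000, lr=0.01):
    # Tiny random-feature network; only the output layer is trained, with dropout.
    w1 = rng.normal(0, 1, size=hidden)
    b1 = rng.uniform(-3, 3, size=hidden)
    w2 = np.zeros(hidden)
    for _ in range(steps):
        h = np.tanh(x[:, None] * w1 + b1)
        h = h * (rng.random(h.shape) > p) / (1 - p)  # inverted dropout
        w2 -= lr * h.T @ (h @ w2 - y) / x.size  # gradient step on squared error
    return w1, b1, w2


def mc_dropout_predict(net, x_new, p=0.2, n_passes=200):
    # MC dropout: keep dropout ON at prediction time and collect many passes.
    w1, b1, w2 = net
    h = np.tanh(x_new[:, None] * w1 + b1)
    preds = np.stack([(h * (rng.random(h.shape) > p) / (1 - p)) @ w2
                      for _ in range(n_passes)])
    return preds.mean(axis=0), preds.std(axis=0)


poly = np.polyfit(x_train, y_train, 3)
results = {
    "bayesian": bayesian_predict(x_train, y_train, x_test),
    "mc_simulation": mc_input_simulation(lambda x: np.polyval(poly, x), x_test),
    "ensemble": ensemble_predict(x_train, y_train, x_test),
    "mc_dropout": mc_dropout_predict(train_dropout_net(x_train, y_train), x_test),
}
for name, (mean, std) in results.items():
    print(name, np.round(mean, 2), np.round(std, 2))
```

The out-of-range test point `x = 5.0` is included on purpose: it is where a sensible method should report more uncertainty, and the Bayesian estimate here does give it a larger standard deviation than `x = 0.0`.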

Uncertainty in AI predictions can be presented to users through intuitive visualizations, such as confidence intervals, probability distributions, or graphical indicators like bars and colored overlays that denote levels of certainty.
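As a sketch of the presentation step, sampled predictions (for example, from MC dropout or an ensemble) can be reduced to a mean with a central percentile interval, and a standard deviation can be mapped to a simple text bar. The function names, the 95% default level, and the `max_std` value at which the bar empties are hypothetical choices for illustration only.

```python
import numpy as np


def summarize(samples, level=0.95):
    """Return the mean and the central `level` percentile interval of samples."""
    samples = np.asarray(samples, dtype=float)
    tail = (1 - level) / 2 * 100  # percent cut from each tail
    low, high = np.percentile(samples, [tail, 100 - tail])
    return samples.mean(), low, high


def certainty_bar(std, max_std=1.0, width=10):
    """Text indicator: more '#' cells mean lower spread (hypothetical scale)."""
    certainty = 1 - min(std / max_std, 1.0)
    filled = round(certainty * width)
    return "#" * filled + "-" * (width - filled)


# Stand-in samples; in practice these would come from repeated stochastic predictions.
samples = np.random.default_rng(1).normal(2.0, 0.3, size=500)
mean, low, high = summarize(samples)
print(f"{mean:.2f} [{low:.2f}, {high:.2f}]  {certainty_bar(samples.std())}")
```

A percentile interval is used rather than mean ± 2 standard deviations because it makes no assumption that the sampled outputs are Gaussian.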

Further Reading

- A Survey of Uncertainty in Deep Neural Networks (2023): provides a thorough overview of the methods above (also excellent work, led by a former co-author from Jena)

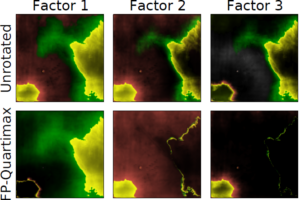

Some of my own work on the topic: Structuring Uncertainty for Fine-Grained Sampling in Stochastic Segmentation Networks (NeurIPS 2022)

In this work, we explored Stochastic Segmentation Networks for image segmentation. These networks model the uncertainty of their segmentation predictions, which we structure into meaningful components, as seen in the bottom row of the image. This makes the AI output more explainable to humans. Through our user interface, humans can remix the contributions of individual components and thereby adjust the overall segmentation in a controlled manner.
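To make the idea of remixing components concrete, here is a toy sketch. It assumes, as in the original Stochastic Segmentation Networks formulation, that the logits follow a Gaussian whose covariance has a low-rank factor, so a sample is the mean logits plus a weighted sum of factor maps (the diagonal noise term is omitted here). Treating each factor map as a component, setting its weight by hand instead of sampling it corresponds loosely to the remixing described above. The function names, array shapes, and binary thresholding are hypothetical, and this is not the structuring method from the paper.

```python
import numpy as np


def remix_segmentation(mean_logits, factors, weights):
    """Binary mask from logits = mean + sum_k weights[k] * factors[k].

    mean_logits: (H, W); factors: (K, H, W) low-rank covariance factors
    (hypothetical shapes); weights: (K,) user-chosen coefficients that
    replace the random latent z ~ N(0, I) used for sampling.
    """
    logits = mean_logits + np.tensordot(weights, factors, axes=1)
    return logits > 0  # foreground where the logit is positive


def sample_segmentation(mean_logits, factors, rng):
    # Random sample: draw z ~ N(0, I) instead of fixing the weights by hand.
    z = rng.standard_normal(factors.shape[0])
    return remix_segmentation(mean_logits, factors, z)


# Toy 2x2 "image": component 0 affects the top row, component 1 the right column.
mean_logits = np.full((2, 2), -1.0)
factors = np.zeros((2, 2, 2))
factors[0, 0, :] = 2.0
factors[1, :, 1] = 2.0
print(remix_segmentation(mean_logits, factors, np.array([1.0, 0.0])))
print(sample_segmentation(mean_logits, factors, np.random.default_rng(0)))
```

Because each weight scales one fixed spatial pattern, changing a single weight alters the segmentation only where that component is active, which is what makes this kind of adjustment controllable.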