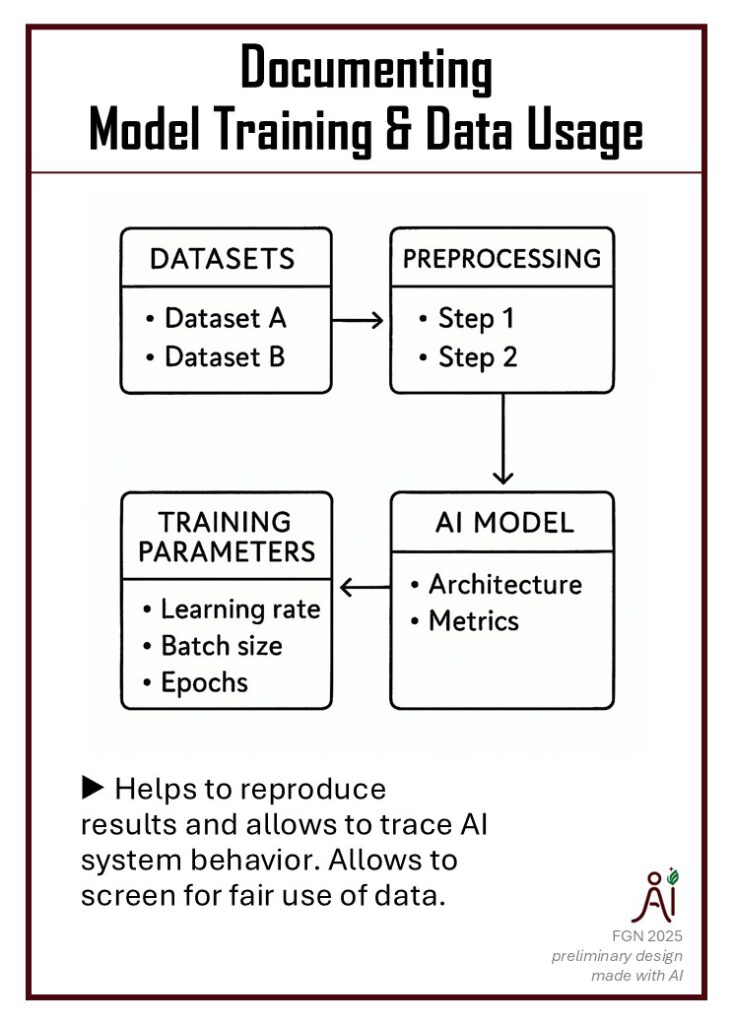

Documenting how training data is collected and used, and how AI models are trained, builds trust, accountability, and clarity for different stakeholders:

- Developers and researchers benefit from reproducibility, easier debugging, and better-informed model optimization.

- Business leaders and clients gain transparency about data sources and ethical safeguards, which supports compliance and brand credibility.

- Regulators and policymakers are equipped for oversight, risk assessment, and checking alignment with legal frameworks.

- End-users and the public benefit from confidence that models are fair, explainable, and have clear privacy terms.

Documentation tools

MLOps tools with experiment tracking and documentation features:

- MLflow: Open-source platform to manage the ML lifecycle, including experiment tracking.

- TensorBoard: A visualization toolkit integrated with TensorFlow for tracking and visualizing experiment metrics.

- Cloud and hosted platforms (if they meet your organization's data-protection and compliance requirements): Azure ML, Neptune.ai, Comet.ml.

- Note: AI assistants (e.g., Copilot, Claude) are increasingly available and capable of supporting coding and documentation tasks.
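To make "experiment tracking" concrete, the sketch below shows the kind of record such tools keep for a training run: hyperparameters, metrics over training steps, free-form tags, and a SHA-256 fingerprint of each training-data source, serialized to JSON. It uses only the Python standard library. `RunRecord`, `log_data_source` and all field names are hypothetical and chosen for this illustration; they are not the API of MLflow, TensorBoard, or any other tool listed above.

```python
import hashlib
import json
import platform
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def sha256_of_bytes(data: bytes) -> str:
    """Fingerprint a dataset snapshot so the exact training data can be identified later."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class RunRecord:
    """Hypothetical minimal record of one training run (illustrative, not a library class)."""
    experiment: str
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)  # metric name -> list of (step, value)
    tags: dict = field(default_factory=dict)
    data_sources: list = field(default_factory=list)
    started_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def log_param(self, key: str, value) -> None:
        # Parameters describe the run's configuration; refuse silent overwrites.
        if key in self.params and self.params[key] != value:
            raise ValueError(f"param {key!r} already logged with a different value")
        self.params[key] = value

    def log_metric(self, key: str, value: float, step: int = 0) -> None:
        # Metrics can change over training, so keep every (step, value) pair.
        self.metrics.setdefault(key, []).append((step, float(value)))

    def set_tag(self, key: str, value: str) -> None:
        self.tags[key] = value

    def log_data_source(self, name: str, origin: str, licence: str, content: bytes) -> None:
        # Record where the data came from, under what terms, and a content hash.
        self.data_sources.append({
            "name": name,
            "origin": origin,
            "licence": licence,
            "sha256": sha256_of_bytes(content),
            "n_bytes": len(content),
        })

    def to_json(self) -> str:
        record = asdict(self)
        record["python_version"] = platform.python_version()
        return json.dumps(record, indent=2, sort_keys=True)


if __name__ == "__main__":
    run = RunRecord(experiment="example-classifier")
    run.log_param("learning_rate", 0.01)
    run.log_param("seed", 42)
    run.set_tag("purpose", "baseline")
    run.log_data_source(
        "toy_table", "hypothetical internal export", "internal use only",
        b"id,age\n1,34\n2,51\n",
    )
    for step, loss in enumerate([0.9, 0.6, 0.45]):
        run.log_metric("train_loss", loss, step=step)
    print(run.to_json())
```

In MLflow, the equivalent steps happen inside a `with mlflow.start_run():` block using `mlflow.log_param` (or `mlflow.log_params`), `mlflow.log_metric(key, value, step=...)` and `mlflow.set_tag`, with the tracking server storing the record instead of a local JSON string. Hashing the data rather than only naming it is a design choice: it lets a later reader confirm that the documented dataset is byte-for-byte the one that was used for training.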

Further Reading

- EU AI Act

- EU rules on transparency and risk obligations come into force (News 2025): the rules require model providers to produce detailed technical documentation of AI training and testing, publish training data summaries, carry out risk assessments, and apply transparency measures.

- Guidelines on the scope of obligations for providers of general-purpose AI models under the AI Act | Shaping Europe’s digital future (accessed 17th of September 2025)